Student evaluations. You've got to laugh. A few weeks ago I was given the results from the evaluations that were done at the end of last semester at one university, and I just got around to opening the envelope.

This is the course evaluation form designed by the school, not my own evaluation form. I think I've mentioned before that I always get my students to fill in a survey at the end of semester. Mine is a form I design myself, which the students have to answer in English. The purpose of this is to find out what parts of the course they thought were the most and least useful, to get them to think about what they learned, to ask them if they thought anything important was missing from the course, and so on. All the questions are open-ended.

I give students this form after I have told them their final grades, on the last day, and ask them to reply honestly. I ask them to include their negative comments as well as positive, because negative answers especially help me to improve the classes next semester. I am the only person who will read their answers, I explain. Their grades are already fixed, and they've already had a chance to dispute them if they wish to, in class. (I do this to avoid the tortuous process of having them do this through official channels, later.)

My evaluation forms are not anonymous, because I want to be able to check the answers against the student's record if they have anything especially bad or good to say about the course. For example, if a student tells me he or she couldn't understand what was going on in the class most of the time, I want to know if this was a student who paid attention and was usually on time. If the student was consistently late, or spent class time sending text messages, or sleeping, I give the comment far less weight than I would in the former case.

The students take this survey very seriously, and take a lot of time answering the questions. I get some interesting and helpful answers. (I also get several declarations of love every semester, which is nice.)

But the answers I get on the official student evaluation forms are often quite different, and the students rarely take more than five minutes to fill them out, although there are more questions. Students do not seem to take these as seriously (because they are anonymous?) even though they are more important as far as the school is concerned. I don't think many students know what these evaluations are really for. To them it is just another meaningless form the university requires them to fill out. I can't tell them that their answers will help me, because they won't. If one student writes that my class is too hard, how can I judge that comment? What if that was the student who came to class only twice? I have no way of knowing. In any case I don't get to see the results until they've all been collated, and that is usually after the next semester has already started. Also, students only have to choose a number on a scale to answer each question, not write out answers, which means that quite frequently they will choose the path of least resistance and not bother reading the questions. I notice this when we get the forms back.

What is likely to happen, if the student likes you, is that they read the first question to find out which end of the scale is 'good,' and write the top number. If they want to look more serious they'll write the second-to-top number. (The bottom and second-to-bottom numbers if they don't like you.) Then they go through the entire form without reading the rest of the questions, writing the same number for all of them. This can wreak havoc with your results when suddenly the 'good' answer switches ends, as happened one year with a poorly designed questionnaire. It can also give you an overly good evaluation when most of the questions are on a one to five scale but one or two of the questions only go up to three, as happened another year with yet another poorly designed form. The universities collate all the answers using a computer, and the computer does the GIGO thing and spits out an impossible percentage result. (105%! I was a better than fabulous teacher that year, with a very small class of students who liked me.)

I don't know what I scored on this latest evaluation, though - the one I just opened - because the university has suddenly gone all high tech this year and given us the collated results on a (gasp) floppy disk. Finally, we have moved into the twentieth century! I have an old floppy disk reader I hadn't used for a couple of years, and miraculously was able to open the file, but there the miracle ended because I was presented with a page of gibberish, in Word. I don't expect the results to be particularly good, though, because the one really important question at the end of last semester's questionnaire, "What is your overall rating for this teacher and class?", was suddenly on a 1 - 10 scale, whereas all the other questions were on a 1 - 5 scale. Several students who had written 5 for everything also wrote 5 for the all-important last question. The fact that this simply does not make sense (This teacher is perfect in every way but overall I'll give her 50%???) will not make a jot of difference to anything. The school will have it on my permanent record that I scored low on the overall rating. Idiots.

At another school they must be using the same questionnaire form mentioned in this article, because the same two questions always turn up at the beginning. Every semester I hear my students asking each other, "Syllabus? What syllabus? What does it mean?"

After they sort that out, they generally answer 'yes' to both questions. I think the students like me, there, because the writer of the article speaks of very different results:

What kinds of questions are being asked in the Japanese version of lecture evaluations? As far as I know, most of the questionnaires begin with these questions:

Question 1. Did you read the lecture syllabus before first attending class?

Question 2. Did the lecture proceed according to the syllabus?

The problem is that far too many students answer "no" to both questions.

This is, of course, absurd, but I am lucky in that my students are kinder liars. They say yes they read the syllabus, which they didn't, and they say yes I followed it, which is also not true. I never do, at that school. That is because I write the syllabus myself, and at the time that I write it I have almost no information about the class beyond the title. I am asked to teach, for example, "Oral Communication 2B," "English Expression 1a" and "English Communication IIB." (Yes, there really is this odd combination of upper and lower case, and Roman and Arabic numerals.) I ask about the students who will be taking these classes, and find out that, say, one of the classes is for English majors and the others are open to all majors. I ask what the course titles mean, and what the difference is between them, and get dusty answers. Nobody is quite sure. The class titles just sounded good, I suppose. What level will the students be? Not sure. Will the class include foreign students? Maybe. How many students can I expect? We'll get back to you, they say, and never do.

So I write a fuzzy syllabus for each class, and ignore it thereafter.

It's worse when the school gives me straight answers, though, because these are usually wrong. I wrote a syllabus for a class I'm teaching this year which I was told would be taken by low-level students, second year, non-English majors, around 30 students. What I got was five students. Four are very high level and one is intermediate. I chose a completely unsuitable textbook, based on the information I was given, and my syllabus would bore the students to tears if I took any notice of it. I don't, of course.

I can't remember what I started out intending to say, but I'll end with this.

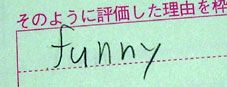

I was just flipping through the evaluation forms they sent back with the floppy disk, and came across one where a student had apparently decided that the comments section had to written in English (it didn't). She (or he) has written:

And that pretty well sums up the whole student evaluation system, if you ask me.


2 comments:

"For example, if a student tells me he or she couldn't understand what was going on in the class most of the time... If the student was consistently late, or spent class time sending text messages, or sleeping, I give the comment far less weight..."

Catch 22 if you ask me. If one does not understand what is going on, is it likely one will be the keen student whom you would give the time of day?

Of course I take that into account as well. But generally, the student who is consistently late/spent class time sending text messages and so on has been doing it from the start and has never made an effort. Unfortunately there are always one or two of those.

Students who try and still don't get it are different, because I can SEE that happening during class, and do something about it. This happens quite often. A little personal attention works wonders. I get everybody on task, then spend some time with the individuals who are having trouble.

The ones I'm looking for on the surveys, though, are the ones who seemed to be getting it and did fairly well but still felt they didn't really understand. That's where I need to know, so I can be on the lookout for it next semester, and do something about it. I need to know the signs before they happen. Japanese students tend to not give many clues, and you have to learn them.
